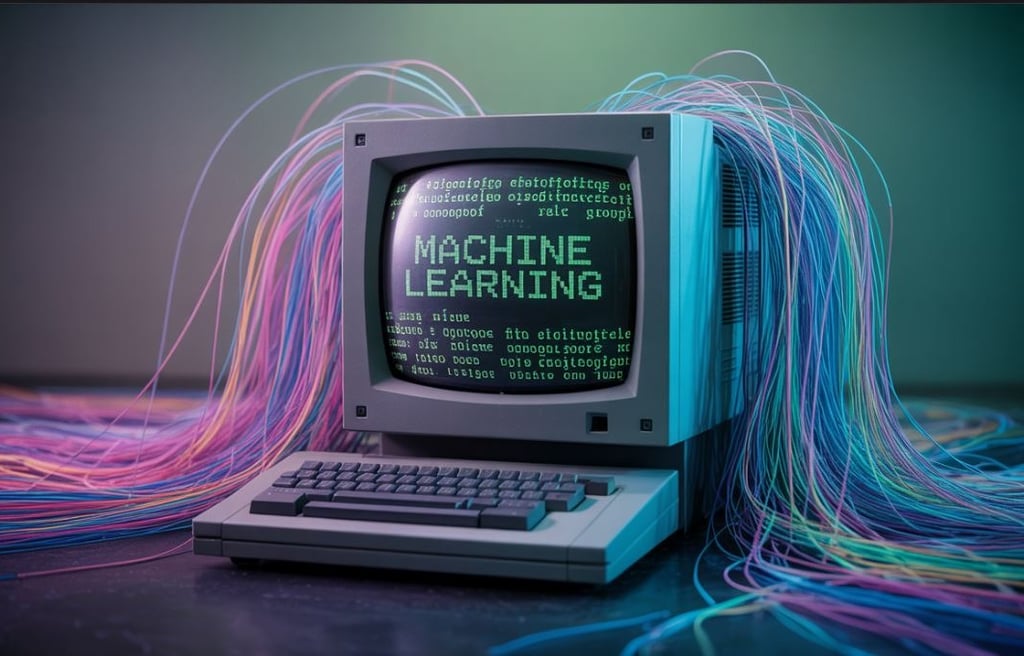

Machine Learning in 2025: Algorithms, Challenges, and the Future of Artificial Intelligence

Machine Learning is reshaping science, business, and society. From deep learning to neuromorphic AI, explore the technical foundations, challenges, and cutting-edge research that define the present and future of ML.

Why Machine Learning Still Matters

It’s tempting to assume that machine learning (ML) has already peaked. After all, we live in a world saturated with recommendation systems, chatbots, and generative art. Yet, behind every flashy demo lies a set of mathematical principles and computational architectures that continue to evolve at a staggering pace.

The modern ML revolution arguably began in 2012, when AlexNet, a deep convolutional neural network trained on GPUs, won the ImageNet Large Scale Visual Recognition Challenge with a top-5 error rate of about 15%, compared with about 26% for the runner-up. This moment catalyzed a new era: GPUs became the new microscopes of science, and neural networks shifted from academic curiosities to industrial infrastructure.

Since then, ML has transformed into the intellectual backbone of contemporary artificial intelligence. It is not just a toolkit for automating tasks; it is a paradigm for extracting knowledge from high-dimensional data and modeling complex systems. In 2025, ML research is no longer about proving that neural networks work — it is about scaling them responsibly, making them interpretable, and expanding them into domains once thought untouchable.

The Technical Foundations: Beyond the Basics

At its core, ML is the art of approximating functions. It is the process of finding a mapping $f : X \to Y$ that generalizes well from limited observations. While this framing may sound abstract, it underpins virtually every breakthrough in the field.
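One common way to make "generalizes well from limited observations" precise is empirical risk minimization. In the formulation below, the hypothesis class $\mathcal{F}$, the loss function $\ell$, the $n$ training pairs $(x_i, y_i)$, and the data distribution $P$ are notation introduced here for illustration; the article itself does not commit to a specific formalism.

```latex
\hat{f} = \arg\min_{f \in \mathcal{F}} \frac{1}{n} \sum_{i=1}^{n} \ell\big(f(x_i), y_i\big),
\qquad
R(f) = \mathbb{E}_{(x, y) \sim P}\big[\ell\big(f(x), y\big)\big]
```

Training minimizes the average loss on observed data, but what matters in deployment is the expected risk $R(\hat{f})$ on new data drawn from $P$; the difference between the two is the generalization gap.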

Supervised Learning remains the most widely applied paradigm. By training on labeled data, models learn to map inputs to outputs: classify tumors in medical scans, translate text across languages, or predict stock market volatility. The strength of this approach lies in its directness, but it is constrained by the availability (and quality) of labeled datasets.
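As a minimal sketch of the supervised setting, the snippet below fits a straight line to a handful of labeled examples with ordinary least squares. The data points and the helper names `fit_line` and `predict` are hypothetical, chosen only to show a model learning an input-to-output mapping from labels.

```python
def fit_line(xs, ys):
    """Fit y ~ w * x + b by ordinary least squares (closed form, one input feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the intercept makes the line pass through the means.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    b = mean_y - w * mean_x
    return w, b


def predict(w, b, x):
    return w * x + b


# Hypothetical labeled examples (input, noisy target), for illustration only.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]

w, b = fit_line(xs, ys)
print(f"w = {w:.2f}, b = {b:.2f}")
print(f"prediction at x = 5: {predict(w, b, 5.0):.2f}")
```

On these made-up points the fit gives w = 1.96 and b = 1.10, so the model predicts 10.90 for an unseen input of 5. The quality of that prediction depends entirely on how representative the labeled data is, which is the constraint noted above.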

Unsupervised Learning tackles the problem of uncovering latent structure without explicit labels. From clustering users in marketing analytics to discovering new materials in chemistry, these methods reveal patterns that human intuition often misses. The emergence of self-supervised learning — a paradigm in which data generates its own supervision — has blurred the line between “unsupervised” and “supervised,” powering today’s most advanced foundation models.
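A classic example of uncovering structure without labels is k-means clustering. The sketch below is a plain-Python version of Lloyd's algorithm (alternate between assigning points to the nearest centroid and moving each centroid to the mean of its points); the two toy "blobs" of points and the function names are hypothetical.

```python
import random


def sq_dist(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))


def assign(points, centroids):
    # Each point gets the index of its nearest centroid (ties go to the lower index).
    return [min(range(len(centroids)), key=lambda j: sq_dist(p, centroids[j])) for p in points]


def kmeans(points, k, iters=100, seed=0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k data points
    for _ in range(iters):
        labels = assign(points, centroids)
        new_centroids = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                # Move the centroid to the mean of its assigned points.
                new_centroids.append(tuple(sum(c) / len(members) for c in zip(*members)))
            else:
                new_centroids.append(centroids[j])  # keep an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: assignments can no longer change
        centroids = new_centroids
    return centroids, assign(points, centroids)


# Two hypothetical, well-separated groups of unlabeled 2D points.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
centroids, labels = kmeans(points, k=2)
print(centroids, labels)
```

No labels are supplied, yet the algorithm recovers the two groups; on real data the number of clusters `k` and the initialization matter a great deal.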

Reinforcement Learning (RL) introduces dynamics and decision-making into the equation. Here, agents interact with environments, receiving rewards or penalties as feedback. RL has given rise to some of the most iconic milestones in AI, including AlphaGo's historic 2016 victory over Lee Sedol, one of the world's strongest Go players. Its potential now extends far beyond games, from autonomous robotics to adaptive resource allocation in energy grids.
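To make the reward-driven loop concrete, here is a minimal tabular Q-learning sketch. The five-state "corridor" environment, its reward of 1 for reaching the last state, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all hypothetical choices for illustration; systems like AlphaGo combine far more elaborate methods with deep networks.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state, move):
    nxt = min(max(state + move, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    reward = 1.0 if done else 0.0  # reward only when the goal is reached
    return nxt, reward, done


def greedy_action(q_row, rng):
    best = max(q_row)
    # Break ties randomly so the untrained agent explores both directions.
    return rng.choice([a for a, v in enumerate(q_row) if v == best])


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit current estimates, sometimes act randomly.
            a = rng.randrange(2) if rng.random() < epsilon else greedy_action(q[s], rng)
            s2, r, done = step(s, ACTIONS[a])
            # Temporal-difference update toward reward + discounted best future value.
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)
```

The agent is never told that "right" is correct; it infers this from delayed rewards propagated backward through the value estimates.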

One of the most persistent dilemmas in ML is the bias-variance trade-off. High-bias models oversimplify reality, missing essential patterns. High-variance models overfit, memorizing noise rather than learning generalizable rules. Navigating this trade-off is not merely academic: it determines whether a self-driving car misclassifies a pedestrian or whether a medical AI safely recommends treatment.
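The trade-off can be seen in a small experiment. The sketch below assumes a hypothetical ground truth, y = sin(x) plus Gaussian noise, and compares three regressors: a constant predictor (high bias, it ignores the input), 1-nearest-neighbor (high variance, it memorizes every noisy training point), and 5-nearest-neighbor as a middle ground. The data sizes, noise level, and seed are illustrative choices.

```python
import math
import random


def make_data(n, rng, noise=0.3):
    # Hypothetical ground truth: y = sin(x) plus Gaussian noise.
    xs = [rng.uniform(0.0, 2 * math.pi) for _ in range(n)]
    ys = [math.sin(x) + rng.gauss(0.0, noise) for x in xs]
    return xs, ys


def knn_predict(train_x, train_y, x, k):
    # Average the targets of the k training inputs closest to x.
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def mse(preds, ys):
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)


rng = random.Random(42)
train_x, train_y = make_data(30, rng)
test_x, test_y = make_data(500, rng)

results = {}
# High bias: ignore x entirely and always predict the training mean.
mean_y = sum(train_y) / len(train_y)
results["constant"] = (mse([mean_y] * len(train_y), train_y), mse([mean_y] * len(test_y), test_y))
# k = 1 memorizes every training point (high variance); k = 5 smooths over neighbors.
for k in (1, 5):
    train_err = mse([knn_predict(train_x, train_y, x, k) for x in train_x], train_y)
    test_err = mse([knn_predict(train_x, train_y, x, k) for x in test_x], test_y)
    results[f"{k}-NN"] = (train_err, test_err)

for name, (train_err, test_err) in results.items():
    print(f"{name:>8}: train MSE {train_err:.3f}, test MSE {test_err:.3f}")
```

The 1-NN model reaches zero training error by memorizing noise, yet its test error is higher; the constant model is poor on both. Comparing train and test error in this way is the basic diagnostic for which side of the trade-off a model sits on.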

The Engine of the Present: Deep Learning

If machine learning is the field, deep learning (DL) is the engine driving its exponential growth. Deep architectures have redefined state-of-the-art performance across vision, language, and multimodal tasks.

Convolutional Neural Networks (CNNs): These architectures leverage local connectivity and weight sharing to capture spatial hierarchies in data. They power everything from facial recognition systems to satellite image analysis. In healthcare, published studies report CNNs matching or approaching radiologist-level performance on specific cancer-detection tasks in medical scans.
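Local connectivity and weight sharing are easiest to see in the convolution operation itself. The minimal sketch below computes a "valid" (unpadded) 2D cross-correlation, which is what deep learning libraries implement under the name convolution. The tiny image and the vertical-edge kernel are hypothetical; in a real CNN the kernel values are learned.

```python
def conv2d(image, kernel):
    """Valid (no padding, stride 1) 2D cross-correlation of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Local connectivity: each output depends only on a kh x kw patch.
            # Weight sharing: the same kernel weights are reused at every (i, j).
            out[i][j] = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
    return out


# Hypothetical 4x6 image: dark left half, bright right half.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]
# Hand-written vertical-edge detector (a trained CNN would learn such filters).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
print(conv2d(image, kernel))
```

The output is nonzero only where the window straddles the dark-to-bright boundary. Stacking such layers lets later filters respond to combinations of edges, which is the spatial hierarchy described above.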

Recurrent Neural Networks (RNNs) and LSTMs: Before the transformer era, RNNs dominated sequential data processing. Long Short-Term Memory (LSTM) units addressed the vanishing gradient problem, enabling breakthroughs in speech recognition and early natural language processing.
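The vanishing gradient problem can be illustrated with a deliberately simplified scalar model. In a tanh RNN, the gradient flowing back through T steps is a product of factors w * (1 - h_t^2), which shrinks geometrically when those factors are below 1. Along an LSTM's cell state, the direct-path factor is the forget gate f_t, which can stay close to 1. This sketch assumes scalar weights, fixed forget-gate values, and ignores the gates' own dependence on the hidden state, so it shows the mechanism rather than a full LSTM gradient.

```python
import math


def rnn_gradient(w, u, xs, h0=0.0):
    """Product of dh_t/dh_{t-1} = w * (1 - h_t**2) along a scalar tanh RNN."""
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        grad *= w * (1.0 - h * h)
    return grad


def lstm_cell_path_gradient(forget_gates):
    """Product of dc_t/dc_{t-1} = f_t along the cell state's direct (additive) path."""
    grad = 1.0
    for f in forget_gates:
        grad *= f
    return grad


T = 50  # hypothetical sequence length
xs = [1.0] * T  # hypothetical constant input
rnn_grad = rnn_gradient(w=0.9, u=0.5, xs=xs)
lstm_grad = lstm_cell_path_gradient([0.99] * T)  # forget gates held near 1
print(f"vanilla RNN gradient after {T} steps: {rnn_grad:.3e}")
print(f"LSTM cell-state gradient after {T} steps: {lstm_grad:.3f}")
```

With these illustrative numbers the RNN's gradient collapses to a vanishingly small value, while the cell-state path keeps a gradient of the same order as 1, which is why LSTMs could learn dependencies spanning many time steps.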

Transformers: Introduced by Vaswani et al. in 2017, transformers revolutionized ML by discarding recurrence in favor of attention mechanisms. Attention lets a model weigh the relevance of every input token to every other token, and because all positions are processed in parallel rather than one step at a time, transformers train far more efficiently on modern hardware and scale to much larger datasets than recurrent networks. This architecture underpins GPT, LLaMA, Gemini, and virtually every leading foundation model. The leap was not just architectural but philosophical: transformers turned ML into a discipline of scaling laws, in which performance improves predictably as data, compute, and model size grow, and new abilities appear to emerge at sufficient scale.
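The core operation from Vaswani et al. is scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. The plain-Python sketch below computes it one query at a time so each step is visible. The tiny Q, K, and V matrices are hypothetical; real implementations use batched tensor operations, multiple heads, and learned projections to produce Q, K, and V.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        # Relevance of every key to this query, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
        all_weights.append(weights)
    return outputs, all_weights


# Toy example: 2 queries attending over 3 tokens with 2-dimensional keys and values.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
out, weights = attention(Q, K, V)
for row in weights:
    print([round(w, 3) for w in row])
```

Each query's weights sum to 1, and keys that align with the query receive more weight. Every query row is independent of the others, which is what allows the parallel computation mentioned above.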

Today, large language models (LLMs) demonstrate capabilities in reasoning, coding, and even scientific discovery. Yet they also embody the central paradox of modern ML: unprecedented power coupled with opaque decision-making and resource intensiveness.

Beyond the Hype: Technical Challenges in 2025

Despite spectacular progress, ML faces formidable challenges that demand critical scrutiny:

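Computational Cost: OpenAI has not disclosed GPT-4's training compute, but independent estimates put it on the order of 10^25 floating-point operations, or hundreds of thousands of petaflop/s-days, far beyond the roughly 3,640 petaflop/s-days reported for GPT-3. The electricity needed to train and serve models at this scale raises both economic and ecological concerns.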
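The petaflop/s-day unit is easy to misread, so a quick conversion helps: one petaflop/s-day is 10^15 operations per second sustained for 86,400 seconds, or 8.64 × 10^19 operations. The sketch below converts total training FLOPs into this unit. The GPT-3 figure of about 3.14 × 10^23 FLOPs is the one reported by Brown et al. (2020); the 10^25 value is a hypothetical round number at the order of magnitude of public GPT-4 estimates, not a disclosed figure.

```python
SECONDS_PER_DAY = 24 * 60 * 60
PETAFLOP_PER_S = 1e15  # one petaflop/s = 10**15 floating-point operations per second


def flops_to_pf_days(total_flops):
    """Convert a total operation count into petaflop/s-days."""
    return total_flops / (PETAFLOP_PER_S * SECONDS_PER_DAY)


# GPT-3 (175B parameters): about 3.14e23 training FLOPs as reported by Brown et al. (2020).
gpt3_pf_days = flops_to_pf_days(3.14e23)
# Hypothetical 1e25-FLOP run, matching the order of magnitude of outside GPT-4 estimates.
hypothetical_pf_days = flops_to_pf_days(1e25)

print(f"GPT-3: {gpt3_pf_days:,.0f} petaflop/s-days")
print(f"1e25 FLOPs: {hypothetical_pf_days:,.0f} petaflop/s-days")
```

The GPT-3 conversion lands at roughly 3,600 petaflop/s-days, consistent with the published figure, and a 10^25-FLOP run comes out above 100,000, which is why "hundreds" understates the scale of frontier training runs.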

Generalization Beyond the Training Distribution: ML models excel within the statistical boundaries of their training data but stumble when faced with out-of-distribution inputs. This brittleness remains a core obstacle to deploying AI in safety-critical contexts like healthcare or aerospace.

Hallucination in Generative Models: LLMs and diffusion models generate fluent, coherent content, but often fabricate facts. While tolerable in creative domains, hallucination becomes dangerous in medical, financial, or legal applications.

Explainability and Trust: The “black box” nature of deep models remains one of the most active areas of research. Explainable AI (XAI) seeks to provide interpretable insights into model behavior, but balancing transparency with performance remains unsolved.

These challenges highlight a truth often overlooked in hype cycles: ML is not magic. It is mathematics, statistics, and optimization — constrained by the limits of computation and data.

Future Trends and Research Frontiers

As we look forward, several frontiers stand out as defining the next decade of ML research:

Lifelong and Continual Learning: Most ML systems “forget” previous tasks when trained on new ones, a phenomenon known as catastrophic forgetting. Achieving lifelong learning — akin to human adaptability — is a holy grail of AI research.
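Catastrophic forgetting shows up even in a one-parameter model. In the sketch below, a hypothetical linear model y = w * x is trained by gradient descent on task A (y = 2x) and then on task B (y = -x); plain sequential training overwrites the task A solution. The second run adds a quadratic penalty pulling w back toward the weights learned on task A. This is a simplified, uniformly weighted cousin of regularization methods such as Elastic Weight Consolidation (which weights the penalty by parameter importance), and the tasks, learning rate, and penalty strength `lam` are illustrative assumptions.

```python
def train(w, xs, ys, steps=200, lr=0.05, anchor=None, lam=0.0):
    for _ in range(steps):
        # Gradient of the mean squared error of the prediction w * x.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        if anchor is not None:
            # Quadratic penalty (lam / 2) * (w - anchor)**2 resists drifting from the old task.
            grad += lam * (w - anchor)
        w -= lr * grad
    return w


def loss(w, xs, ys):
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [2 * x for x in xs]  # hypothetical task A: y = 2x
task_b = [-x for x in xs]     # hypothetical task B: y = -x

w_a = train(0.0, xs, task_a)                              # learn task A
w_plain = train(w_a, xs, task_b)                          # then task B, no protection
w_anchor = train(w_a, xs, task_b, anchor=w_a, lam=2.0)    # then task B, anchored to A

for name, w in [("after A", w_a), ("A then B", w_plain), ("A then B, anchored", w_anchor)]:
    print(f"{name:>20}: w = {w:.3f}, loss A = {loss(w, xs, task_a):.3f}, loss B = {loss(w, xs, task_b):.3f}")
```

Unprotected training drives w to -1 and task A's loss jumps from near zero to about 5.6; the anchored run settles at a compromise (w of about 0.85) that keeps part of task A at the cost of task B. With a single shared parameter no setting satisfies both tasks, which is exactly why continual learning remains hard.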

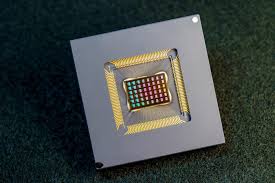

Neuromorphic Computing: Inspired by the efficiency of biological neurons, neuromorphic chips promise orders-of-magnitude reductions in energy consumption. Companies like Intel (Loihi) and academic labs are exploring hardware-software co-design to unlock brain-like efficiency.

Federated and Privacy-Preserving Learning: With rising concerns about data sovereignty, federated learning allows decentralized training across devices without aggregating sensitive data centrally. This approach could define the future of healthcare AI, where patient privacy is paramount.
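A widely used scheme for federated training is Federated Averaging (FedAvg): each client trains locally on its own data, sends back only model weights, and the server averages them in proportion to how many examples each client holds. The sketch below applies that idea to a hypothetical one-parameter linear model and two hypothetical clients; real deployments add secure aggregation, client sampling, and often differential privacy on top.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Run a few epochs of gradient descent on one client's private (x, y) pairs."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(global_w, client_datasets, rounds=20):
    total = sum(len(d) for d in client_datasets)
    for _ in range(rounds):
        # Each client starts from the current global weight; raw data never leaves the client.
        local_ws = [local_update(global_w, d) for d in client_datasets]
        # Server step: average client weights, weighted by local dataset size.
        global_w = sum(len(d) / total * w for d, w in zip(client_datasets, local_ws))
    return global_w


# Two hypothetical clients whose private data both follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]
w = fed_avg(0.0, clients)
print(f"global weight after federated training: {w:.4f}")
```

The server recovers the shared relationship (w close to 3) without ever seeing an individual data point. When client data distributions differ, as they do across hospitals, plain averaging can converge more slowly or to a compromise, which is an active research question.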

Interdisciplinary Fusion: Perhaps the most exciting trend is the merging of ML with other sciences. From accelerating fusion energy simulations to decoding neural activity in brain-computer interfaces, ML is becoming a universal amplifier of scientific discovery.

Nerd-Worthy Applications That Redefine Science and Imagination

For the geek-minded reader, the true thrill lies not in chatbots but in the scientific revolutions ML enables:

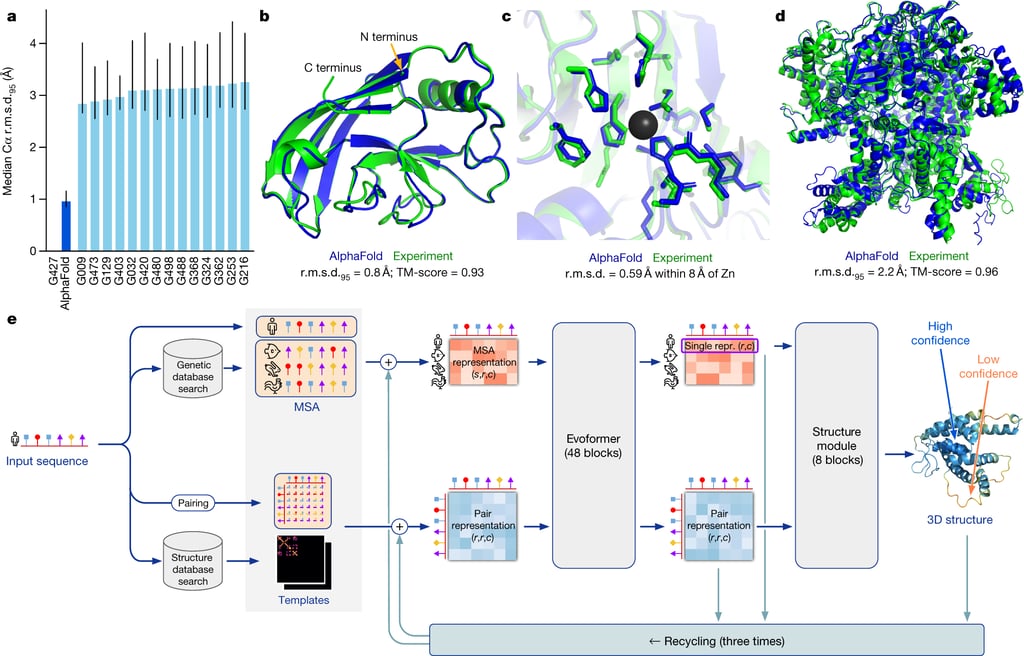

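AlphaFold (DeepMind): A decades-old grand challenge in biology, predicting a protein's three-dimensional structure from its amino-acid sequence, was effectively cracked by AlphaFold 2 at the CASP14 assessment in 2020, reshaping drug discovery and molecular biology.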

Cosmological Simulations: ML accelerates universe-scale simulations, enabling cosmologists to test theories of dark matter and cosmic evolution faster than ever before.

Adaptive Robotics: Reinforcement learning is giving rise to robots that learn motor skills dynamically, paving the way for general-purpose robotics.

Procedural Game Design: ML-driven generative systems can create entire worlds, NPC behaviors, and narratives on the fly, transforming the future of interactive entertainment.

These applications show that ML is not just about convenience; it is about extending human cognition into realms previously inaccessible.

Conclusion: The Human–Machine Symbiosis

The trajectory of machine learning will not be characterized by a simple replacement of human capabilities, but by a profound co-evolution between human cognition and computational architectures. ML is, at its essence, a mirror of human reasoning: it amplifies our capacity for abstraction while simultaneously reproducing the cognitive and societal biases embedded in data. From a scientific standpoint, it represents the culmination of statistical inference and optimization theory — pattern recognition operating at scales previously unattainable — yet it also signals the emergence of computational paradigms that may transcend classical definitions of intelligence.

To reduce ML to hype is to ignore its epistemological significance: it is rapidly becoming the universal substrate for discovery, akin to electricity in the industrial age or the microscope in biology. However, uncritical adoption risks entrenching systemic biases, exacerbating inequalities, and imposing unsustainable ecological costs. The grand challenge of our generation is therefore dual: to rigorously advance the mathematical and computational foundations of learning systems, while embedding them within ethical, interpretable, and energy-conscious frameworks.

If this challenge is met, machine learning will not merely automate tasks — it will expand the frontiers of science itself, catalyzing discoveries in physics, biology, and cognitive science. The future will not be defined by “artificial” intelligence replacing natural intelligence, but by a symbiotic continuum where both co-evolve, generating entirely new forms of knowledge.

Deep Dive Resources:

Vaswani, A. et al. (2017). Attention Is All You Need. NeurIPS. - https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press. - https://www.deeplearningbook.org/

Sutton, R. S. & Barto, A. G. (2018). Reinforcement Learning: An Introduction (2nd ed.). MIT Press. - https://mitpress.ublish.com/ebook/reinforcement-learning-an-introduction-2-preview/2351/375

Marcus, G. (2020). The Next Decade in AI: Four Steps Towards Robust Artificial Intelligence. arXiv:2002.06177. - https://arxiv.org/pdf/2002.06177